Journey to K3S: Deploying the first service and its requirements

I have my K3S cluster up and running, and I’m ready to deploy my first service. I’m going to start by migrating one of the simplest services in my current Docker setup: the RSS reader Miniflux.

I’m going to use Helm charts throughout the process, since k3s supports Helm out of the box, but this first service also needs some preparation. I’m missing a storage backend, a way to route traffic from the internet into the cluster, a way to manage certificates, and the database itself. I also need to migrate my current data from one database to another, but both are PostgreSQL databases, so a simple pg_dump/pg_restore (or psql) should do the trick.

Setting up Longhorn for storage

The first thing I need is a storage backend for my services. I’m going to use Longhorn for this, since it’s a simple, easy-to-use solution that works well with k3s. I’m going to install it using Helm with the default configuration for now.
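Because k3s bundles a Helm controller, you can describe the install as a `HelmChart` resource instead of running the helm CLI. The sketch below assumes the official chart repository at https://charts.longhorn.io and a `longhorn-system` target namespace, and it uses the chart defaults. Apply it with `kubectl apply -f`, or drop it into `/var/lib/rancher/k3s/server/manifests/` on a server node so k3s deploys it automatically.

```yaml
# Longhorn installed through the k3s Helm controller (sketch; default chart values)
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: longhorn
  namespace: kube-system            # HelmChart resources conventionally live in kube-system on k3s
spec:
  repo: https://charts.longhorn.io
  chart: longhorn
  targetNamespace: longhorn-system  # namespace the chart's resources are installed into
  createNamespace: true             # create longhorn-system if it does not exist yet
```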

This should create all the resources Longhorn needs to work. In my case I also enabled the ingress for the Longhorn UI so I could configure the storage allocated on each node according to my needs and hardware, though I won’t cover that in this post.
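The chart’s `ingress` values control the UI ingress. The sketch below assumes the Longhorn chart’s `ingress.*` keys and Traefik, which k3s installs as its default ingress controller. The host is the local domain I use for the UI. You can put these values in a file passed to `helm upgrade --install` with `-f`, or in the `valuesContent` field of a k3s `HelmChart`.

```yaml
# Longhorn chart values enabling the UI ingress (sketch)
ingress:
  enabled: true
  ingressClassName: traefik        # k3s ships Traefik as the default ingress controller
  host: longhorn.k3s-01.home.arpa  # local-only domain, resolved via local DNS or /etc/hosts
  tls: false                       # plain HTTP: only acceptable on a trusted LAN
  path: /
```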

With this you should be able to access your Longhorn UI at the domain set up in your ingress. In my case it’s longhorn.k3s-01.home.arpa.

Keep in mind that this is a local domain, so you might need to set up a local DNS server or add the domain to your `/etc/hosts` file.

This example is far from perfect. If you plan to expose this ingress, be sure to use a proper certificate and secure it with authentication and other security measures.

Setting up cert-manager to manage certificates

The next step is to set up cert-manager to manage the certificates for my services. I’m going to use Let’s Encrypt as my certificate authority and let cert-manager generate certificates for the domains of the external ingresses I’m going to set up.
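The k3s `HelmChart` approach also works for cert-manager. This sketch assumes the Jetstack chart repository and a `cert-manager` namespace. It asks the chart to install the cert-manager CRDs, which issuers and certificates depend on. The `crds.enabled` key applies to cert-manager v1.15 and later; older chart versions used `installCRDs: true` instead.

```yaml
# cert-manager installed through the k3s Helm controller (sketch)
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: cert-manager
  namespace: kube-system
spec:
  repo: https://charts.jetstack.io
  chart: cert-manager
  targetNamespace: cert-manager
  createNamespace: true
  valuesContent: |-
    # Install the CRDs (Certificate, Issuer, ClusterIssuer, ...) with the chart.
    # cert-manager v1.15+ key; older charts used `installCRDs: true`.
    crds:
      enabled: true
```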

To use Let’s Encrypt as the certificate authority, I need to set up an issuer for it. I’m going to use the production issuer in this example, since the goal is to expose the service to the internet.
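Here is a minimal sketch of that issuer as a cluster-wide `ClusterIssuer`, so any namespace can use it. It assumes an HTTP-01 challenge answered through Traefik; the email address and the account-key secret name are placeholders. HTTP-01 only works if Let’s Encrypt can reach the ingress on port 80 from the internet. If it can’t, a DNS-01 solver is the usual alternative.

```yaml
# Let's Encrypt production issuer (sketch; placeholders marked below)
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-production
spec:
  acme:
    # Let's Encrypt production ACME endpoint (rate-limited; use staging while testing)
    server: https://acme-v02.api.letsencrypt.org/directory
    email: you@example.com                      # placeholder: your contact address
    privateKeySecretRef:
      name: letsencrypt-production-account-key  # hypothetical name for the ACME account key secret
    solvers:
      - http01:
          ingress:
            ingressClassName: traefik           # solve challenges through k3s's Traefik
```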

With this, I should be able to request certificates for my services using the letsencrypt-production issuer.

Setting up the CloudNative PostgreSQL Operator

The chart for Miniflux can deploy a PostgreSQL instance for the service, but I’m going to manage the database for this service (and others) myself with the CloudNative PostgreSQL Operator. This way I can manage the databases separately from the services.

Miniflux only supports PostgreSQL, so the operator covers everything it needs. First, let’s install the operator using its Helm chart.
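You can install the operator through the k3s Helm controller as well. The sketch below assumes the CloudNativePG chart repository at https://cloudnative-pg.github.io/charts and the `cnpg-system` namespace.

```yaml
# CloudNativePG operator installed through the k3s Helm controller (sketch)
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: cnpg
  namespace: kube-system
spec:
  repo: https://cloudnative-pg.github.io/charts
  chart: cloudnative-pg
  targetNamespace: cnpg-system
  createNamespace: true
```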

This will install the CloudNative PostgreSQL Operator in the cnpg-system namespace. I’m going to create a PostgreSQL instance for Miniflux in the miniflux namespace.
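Here is a sketch of the `Cluster` resource, using only the parameters described in this post: two instances, 2Gi of Longhorn-backed storage, and the `miniflux` namespace. The cluster name `miniflux-db` is inferred from the `miniflux-db-app` secret name, because CloudNativePG names the application secret `<cluster>-app`. There is no `bootstrap` section, so the operator falls back to its defaults: a database and owner both named `app`.

```yaml
# Namespace for Miniflux and its database
apiVersion: v1
kind: Namespace
metadata:
  name: miniflux
---
# PostgreSQL cluster managed by CloudNativePG (sketch)
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: miniflux-db        # yields the miniflux-db-app secret and miniflux-db-rw service
  namespace: miniflux
spec:
  instances: 2             # one primary plus one replica
  storage:
    size: 2Gi
    storageClass: longhorn # StorageClass created by the Longhorn chart
```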

This creates a PostgreSQL cluster with two instances and 2Gi of storage in the miniflux namespace. Note that I have specified the longhorn storage class for the storage.

When the cluster is ready, the operator creates a new secret called miniflux-db-app with the connection information for the database: host, port, database name, user, password and a ready-to-use connection URI.
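The generated secret looks roughly like the sketch below. In the real object the values are base64-encoded; the comments show what they decode to, in a trimmed, illustrative form with placeholders. The exact set of keys depends on the CloudNativePG version. This manifest is not meant to be applied; it only shows which keys you can reference.

```yaml
# Illustrative shape of the secret generated by CloudNativePG (do not apply)
apiVersion: v1
kind: Secret
metadata:
  name: miniflux-db-app
  namespace: miniflux
type: kubernetes.io/basic-auth
data:
  username: <base64>  # app
  password: <base64>  # randomly generated by the operator
  host: <base64>      # miniflux-db-rw (read-write service)
  port: <base64>      # 5432
  dbname: <base64>    # app
  uri: <base64>       # postgresql://app:<password>@miniflux-db-rw.miniflux:5432/app
  jdbc-uri: <base64>  # JDBC form of the same connection string
  pgpass: <base64>    # .pgpass-formatted credentials line
```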

We are going to reference this secret directly in the Miniflux deployment below.

Deploying Miniflux

Now that we have all the requirements set up, we can deploy Miniflux.

I’m going to use gabe565’s Miniflux Helm chart for this, since their charts are simple and easy to use. I tried the TrueCharts chart first, but I couldn’t get it to work properly: they only support amd64 and I’m running on arm64, though a few tweaks here and there would probably make it work.

To customize Miniflux, check out its configuration documentation and set the appropriate values in the `env` section.
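Here is a sketch of the Miniflux release as a k3s `HelmChart`. It makes three assumptions:

- The chart is published at https://charts.gabe565.com under the name `miniflux`.
- Its `env` map accepts `valueFrom` entries, following the bjw-s common library these charts build on.
- The bundled PostgreSQL sub-chart is toggled with `postgresql.enabled`.

Check the chart’s own values.yaml before relying on any of these keys. `DATABASE_URL` is read from the `uri` key of the `miniflux-db-app` secret, and `RUN_MIGRATIONS` lets Miniflux update its schema on startup.

```yaml
# Miniflux from gabe565's chart via the k3s Helm controller (sketch; verify keys against the chart)
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: miniflux
  namespace: kube-system
spec:
  repo: https://charts.gabe565.com   # assumed repository URL
  chart: miniflux
  targetNamespace: miniflux
  createNamespace: true
  valuesContent: |-
    env:
      # Skip admin creation: the migrated database already has an admin user
      CREATE_ADMIN: "0"
      # Apply pending schema migrations on startup
      RUN_MIGRATIONS: "1"
      # Connection string taken from the secret generated by CloudNativePG
      DATABASE_URL:
        valueFrom:
          secretKeyRef:
            name: miniflux-db-app
            key: uri
    # Don't deploy the bundled PostgreSQL; the database is managed by the operator
    postgresql:
      enabled: false
```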

I’m using `CREATE_ADMIN: "0"` to avoid creating an admin user for Miniflux, since I already have one in my database after migrating it. If you want to create an admin user, set this to `1` and set the `ADMIN_USERNAME` and `ADMIN_PASSWORD` values in the `env` section. See the chart documentation for more information.

This will create a Miniflux deployment in the miniflux namespace, using the miniflux-db-app database secret for the database connection.

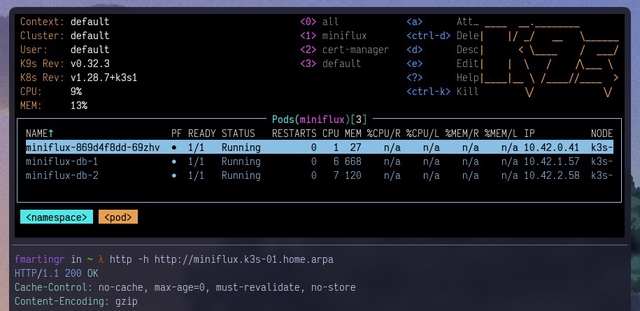

Wait until everything in the miniflux namespace is ready, for example by watching `kubectl get pods -n miniflux`.

Setting up an external ingress

I’m not going to cover the networking setup here, but your cluster needs to be able to route traffic from the internet to the ingress controller (the master nodes). In my case I’m using a zero-trust approach with Tailscale to avoid exposing my homelab directly to the internet, but there are a number of ways to do this; pick the one that suits you best.

Setting up a TLS-enabled ingress for the service is easy with cert-manager and Traefik: we only need to create an Ingress resource in the miniflux namespace with the appropriate configuration and annotations, and cert-manager will take care of the rest.
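Here is a sketch of that Ingress, with these assumptions:

- The hostname `miniflux.example.com` is a placeholder.
- The backend service name `miniflux` and port `8080` (Miniflux’s default listen port) are guesses at what the chart creates; check them with `kubectl get svc -n miniflux`.
- The `cert-manager.io/cluster-issuer` annotation assumes `letsencrypt-production` is a `ClusterIssuer`. For a namespaced `Issuer`, use `cert-manager.io/issuer` instead.

```yaml
# Public ingress for Miniflux with a Let's Encrypt certificate (sketch)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: miniflux
  namespace: miniflux
  annotations:
    # Tells cert-manager which issuer should sign the certificate for this ingress
    cert-manager.io/cluster-issuer: letsencrypt-production
spec:
  ingressClassName: traefik
  tls:
    - hosts:
        - miniflux.example.com   # placeholder domain
      secretName: miniflux-tls   # cert-manager stores the issued certificate here
  rules:
    - host: miniflux.example.com # placeholder domain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: miniflux   # assumed service name created by the chart
                port:
                  number: 8080   # Miniflux's default port
```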

This will create an ingress for Miniflux in the miniflux namespace and cert-manager will take care of the certificate generation and renewal using the letsencrypt-production issuer as specified in the annotations attribute.

After a few minutes you should be able to access Miniflux at the domain set in the host field.

And that’s it! You should have Miniflux up and running in your k3s cluster with all the requirements set up.

I can’t recommend Miniflux enough: it’s a great RSS reader that is simple to use and has a great UI. It was probably the first service I deployed in my homelab, and I’m happy to have it running in my k3s cluster now, years later.