Journey to K3s: Basic Cluster Backups

There is a time to deploy new services to the cluster, and there is a time to back up the cluster. Before I start depending more and more on the services I want to self-host, it’s time to start thinking about backups and disaster recovery. My previous server has been running on a simple premise: if it breaks, I can rebuild it.

I’m going to try to keep that same simple approach here. In theory, if something bad happens, I should be able to rebuild the cluster from scratch using backups of the cluster snapshots and of the data stored in the persistent volumes.

Cluster resources

In my case, I store all the resources I create in a git repository (namespaces, helm charts, configuration for the charts, etc.) so I can recreate them easily if needed. This is a good practice to have in place, but it’s also a good idea to keep a backup of the resources as they exist in the cluster, to avoid problems when the cluster tries to regenerate its state from those same resources.

Set up the NFS share

In my case, the packages required to mount NFS shares were already installed on the system; your experience may vary depending on the distribution you are using.

First I had to create the folder where the NFS share will be mounted:

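As a minimal sketch of this step, assuming a placeholder mount point of /mnt/k3s-backups (pick whatever path suits your setup). The small `run` helper is my own convention for this post: it prints each command instead of executing it unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Placeholder mount point (an assumption); adjust to your setup.
MOUNT_POINT="${MOUNT_POINT:-/mnt/k3s-backups}"

# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

# Create the directory that will serve as the mount point
# (root is usually required for paths under /mnt).
run sudo mkdir -p "$MOUNT_POINT"
```

Running it with `DRY_RUN=0` actually creates the folder.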

Then I mounted the NFS share:

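A rough sketch of the mount, where the server name, export path and mount point are all placeholders rather than my real values. It prints the mount command unless DRY_RUN=0 is set, and also prints an /etc/fstab line that could be used to make the mount persistent across reboots (an optional extra, assuming the defaults and _netdev options are fine for your NAS).

```shell
#!/bin/sh
# Placeholder values (assumptions): replace with your NFS server, export and mount point.
NFS_SERVER="${NFS_SERVER:-nas.example.lan}"
NFS_EXPORT="${NFS_EXPORT:-/volume1/k3s-backups}"
MOUNT_POINT="${MOUNT_POINT:-/mnt/k3s-backups}"

# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

# One-off mount of the export on the mount point.
run sudo mount -t nfs "$NFS_SERVER:$NFS_EXPORT" "$MOUNT_POINT"

# Build a line that could be appended to /etc/fstab to remount at boot.
fstab_line() {
  printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$NFS_SERVER" "$NFS_EXPORT" "$MOUNT_POINT"
}
fstab_line
```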

Check if the NFS share is mounted correctly by listing the contents of the folder, creating a file and checking the available disk space:

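Those three checks can be sketched as a small function. The mount point is again a placeholder, and `check_share` is just a name I made up for this example; depending on the permissions of your export you may need to run it as root.

```shell
#!/bin/sh
# Placeholder mount point (an assumption); adjust to your setup.
MOUNT_POINT="${MOUNT_POINT:-/mnt/k3s-backups}"

# List the directory, write and remove a probe file, then show free space.
check_share() {
  dir="$1"
  ls -la "$dir" || return 1
  probe="$dir/.write-test.$$"
  touch "$probe" || return 1   # fails if the share is read-only or not writable
  rm -f "$probe"
  df -h "$dir"
}

if [ -d "$MOUNT_POINT" ]; then
  check_share "$MOUNT_POINT"
else
  echo "$MOUNT_POINT does not exist yet" >&2
fi
```

In the `df -h` output, the filesystem column should show the NFS export rather than the local disk.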

With this I have the NFS share mounted and ready to be used by the cluster and I can start storing the backups there.

The cluster snapshots

Thankfully, k3s provides a very straightforward way to create snapshots: either run the k3s etcd-snapshot command to create them manually, or rely on a cron schedule to create them automatically. The scheduled snapshots are enabled by default, so I only had to adjust the schedule and retention to my liking and set up a proper backup location: the NFS share.

I adjusted the etcd-snapshot-dir option in the k3s configuration file to point to the new location, along with the retention and other options:

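For illustration, here is a sketch of what those options can look like. The option names are the k3s server options for etcd snapshots, but the directory, schedule and retention values are example values of mine, not necessarily the ones I run with. To stay on the safe side, the script writes them to a scratch file for review instead of touching /etc/rancher/k3s/config.yaml directly.

```shell
#!/bin/sh
# Placeholder values (assumptions); adjust path, schedule and retention to your setup.
SNAPSHOT_DIR="${SNAPSHOT_DIR:-/mnt/k3s-backups/snapshots}"
CONFIG_OUT="${CONFIG_OUT:-./k3s-snapshot-options.yaml}"

# Write the snapshot options to a scratch file; merge them by hand into
# /etc/rancher/k3s/config.yaml on the server node.
cat > "$CONFIG_OUT" <<EOF
etcd-snapshot-dir: "$SNAPSHOT_DIR"
etcd-snapshot-schedule-cron: "0 */6 * * *"  # every six hours (example value)
etcd-snapshot-retention: 20                  # number of snapshots to keep (example value)
etcd-snapshot-compress: true
EOF

cat "$CONFIG_OUT"
echo "After merging, restart k3s: sudo systemctl restart k3s"
```

Note that the retention option is a count of snapshots, not a number of days.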

After restarting the k3s service, new snapshots are created in the new location, and the oldest ones are pruned once the configured retention count is exceeded.

You can also create a snapshot manually by running the command: k3s etcd-snapshot save.
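A quick sketch of an on-demand snapshot followed by a listing, for example right before an upgrade. The snapshot name is a made-up example, and the commands are only printed unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Hypothetical base name for the snapshot (an assumption).
SNAPSHOT_NAME="${SNAPSHOT_NAME:-pre-upgrade}"

# Print each command instead of running it unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

# Take an on-demand snapshot using the given base name.
run sudo k3s etcd-snapshot save --name "$SNAPSHOT_NAME"

# List the snapshots k3s knows about to confirm the new one is there.
run sudo k3s etcd-snapshot ls
```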

Longhorn

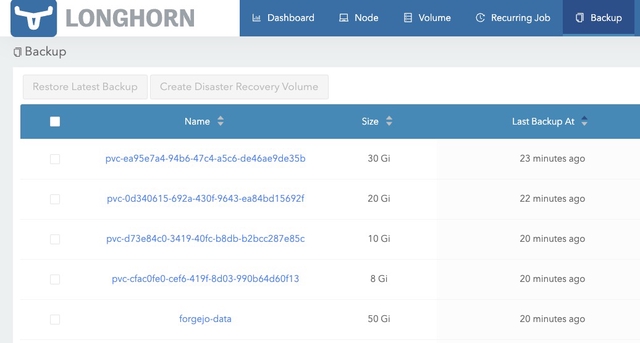

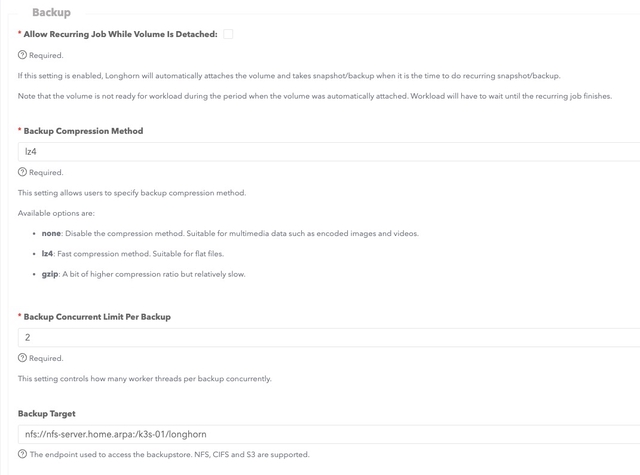

Very easy too! I just followed the Longhorn documentation on NFS backup store by going to the Longhorn Web UI and specifying my NFS share as the backup target.
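The backup target for an NFS store is a URL of the form nfs://server:/path. As a small sketch, with a placeholder server and export path, the value to paste into the backup target setting can be put together like this:

```shell
#!/bin/sh
# Placeholder values (assumptions): replace with your NFS server and export path.
NFS_SERVER="${NFS_SERVER:-nas.example.lan}"
NFS_EXPORT="${NFS_EXPORT:-/volume1/k3s-backups}"

# Build the backup target URL in the nfs://<server>:<absolute path> form.
backup_target() {
  printf 'nfs://%s:%s\n' "$1" "$2"
}

backup_target "$NFS_SERVER" "$NFS_EXPORT"
```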

After setting up the backup target, I created a backup of the Longhorn volumes and scheduled backups to run every day at 2 a.m. with a conservative retention policy of 3 days.
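I did this through the Web UI, but the same schedule can, as far as I understand it, also be expressed as a Longhorn RecurringJob resource. The following is only a sketch under that assumption: the job name is made up, the retain value of 3 assumes one backup per day, and the API version may differ depending on your Longhorn release. The script writes the manifest to a local file for review instead of applying it.

```shell
#!/bin/sh
# Scratch file for the manifest (an assumption); review it before applying.
MANIFEST_OUT="${MANIFEST_OUT:-./longhorn-daily-backup.yaml}"

cat > "$MANIFEST_OUT" <<'EOF'
apiVersion: longhorn.io/v1beta2
kind: RecurringJob
metadata:
  name: daily-backup          # hypothetical name
  namespace: longhorn-system
spec:
  task: backup
  cron: "0 2 * * *"           # every day at 2 a.m.
  retain: 3                   # keep the three most recent backups
  concurrency: 1
  groups:
    - default                 # volumes with no explicit recurring job assigned
EOF

cat "$MANIFEST_OUT"
echo "Apply with: kubectl apply -f $MANIFEST_OUT"
```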

Conclusion

Yes, it was that easy!

With the backups in place I can now sleep a little better knowing that I can recover from a disaster if needed. The next step is to test the backups and the recovery process to make sure everything is working as expected.

I hope I don’t need to use this ever, though. :)